What Is SwiGLU? How to Implement It? And Why Does It Work?

If you have been following the AI space lately, specifically Large Language Models, you might have seen the term "SwiGLU" thrown around in the latest models and research papers.

In this article, we take a step back and take a closer look at what SwiGLU is, how to code it, and why it works.

What is SwiGLU?

In deep learning, we use neural networks to learn behaviours and patterns in our data. "Neural network" sounds like a complicated term, but a neural network is essentially a collection of parameters that we multiply with our input (and add to it) to get a result; we keep tweaking these parameters until they learn something useful.

Depending on what we want to learn, multiplication and addition alone are not always enough to capture the patterns in our data: a stack of layers that only multiply and add is still equivalent to a single linear (affine) transformation. To help our neural network, we also introduce non-linear transformations that let it model more complex patterns.
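To see why multiplying and adding alone is not enough, here is a minimal pure-Python sketch with scalar "layers" (the weights and biases are arbitrary, illustrative values). Two linear layers applied one after the other collapse into a single linear layer, while putting a ReLU between them does not.

```python
# Two "layers" that only multiply and add, using scalar weights for simplicity.
# The weight and bias values are arbitrary, chosen only for illustration.
w1, b1 = 2.0, 1.0
w2, b2 = -3.0, 0.5


def relu(x: float) -> float:
    return max(0.0, x)


def two_linear_layers(x: float) -> float:
    """Layer 2 applied to the output of layer 1, with no activation."""
    return w2 * (w1 * x + b1) + b2


def one_linear_layer(x: float) -> float:
    """A single layer whose weight and bias are combined from both layers."""
    return (w2 * w1) * x + (w2 * b1 + b2)


def two_layers_with_relu(x: float) -> float:
    """The same two layers with a ReLU non-linearity in between."""
    return w2 * relu(w1 * x + b1) + b2


for x in [-2.0, -0.5, 0.0, 1.0, 3.0]:
    # Without an activation, the stack is exactly one linear layer.
    assert two_linear_layers(x) == one_linear_layer(x)
    print(x, two_linear_layers(x), two_layers_with_relu(x))
```

The combined layer has weight `w2 * w1` and bias `w2 * b1 + b2`, so extra depth buys nothing without an activation. With ReLU in between, every input below -0.5 maps to the same output (0.5), which no single straight line can do.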

Such non-linear transformations are called “activation functions”. There are many of them, such as Sigmoid, Tanh, ReLU, LeakyReLU and GELU, among others.
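For reference, here is a minimal pure-Python sketch of the activation functions listed above, written for scalar inputs. The LeakyReLU slope of 0.01 is an assumption (it matches PyTorch's default), and the GELU shown is the exact erf-based form rather than the tanh approximation some libraries also offer.

```python
import math


def sigmoid(x: float) -> float:
    # Numerically stable logistic sigmoid: avoids overflow for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def tanh(x: float) -> float:
    return math.tanh(x)


def relu(x: float) -> float:
    return max(0.0, x)


def leaky_relu(x: float, negative_slope: float = 0.01) -> float:
    # Keeps a small slope for negative inputs instead of zeroing them.
    return x if x >= 0 else negative_slope * x


def gelu(x: float) -> float:
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


for name, fn in [("sigmoid", sigmoid), ("tanh", tanh), ("relu", relu),
                 ("leaky_relu", leaky_relu), ("gelu", gelu)]:
    print(name, [round(fn(x), 4) for x in (-2.0, 0.0, 2.0)])
```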

SwiGLU is one of these activation functions; it was introduced in 2020 by Noam Shazeer in the paper “GLU Variants Improve Transformer”.

The name SwiGLU combines two terms: Swish and GLU. Let’s look at each of them first.

What is the Swish Activation Function?

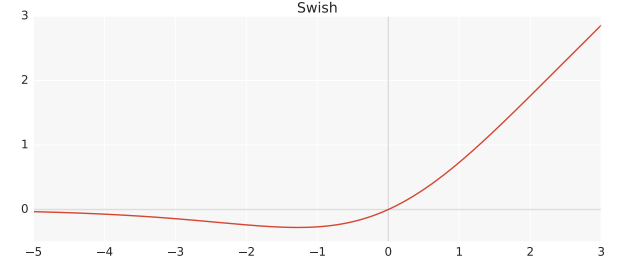

Swish is a non linear activation function that is defined as follows:

$$\text{Swish}_\beta(x) = x \cdot \text{sigmoid}(\beta x)$$

where β is either a constant or a learnable parameter. Swish can outperform the ReLU activation function: unlike ReLU, which has a sharp corner at 0, Swish is smooth around 0, which can lead to better optimization.
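A minimal pure-Python sketch of Swish (scalar input, with β passed as a plain argument rather than learned) shows how β shapes the function: β = 0 gives the linear function x/2, a large β makes Swish approach ReLU, and β = 1 gives the SiLU function. The helper names are illustrative, not taken from any library.

```python
import math


def sigmoid(x: float) -> float:
    # Numerically stable logistic sigmoid.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def swish(x: float, beta: float = 1.0) -> float:
    """Swish_beta(x) = x * sigmoid(beta * x)."""
    return x * sigmoid(beta * x)


def silu(x: float) -> float:
    # SiLU is Swish with beta fixed to 1.
    return swish(x, beta=1.0)


def relu(x: float) -> float:
    return max(0.0, x)


# beta = 0: sigmoid(0) = 0.5, so Swish reduces to the linear function x / 2.
print(swish(3.0, beta=0.0))  # 1.5
# Large beta: the sigmoid approaches a step, so Swish approaches ReLU.
print(swish(3.0, beta=50.0), relu(3.0))
print(swish(-3.0, beta=50.0), relu(-3.0))
# Unlike ReLU, SiLU is smooth at 0 and dips slightly below 0 for negative inputs.
print([round(silu(x), 4) for x in (-3.0, -1.0, 0.0, 1.0, 3.0)])
```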

What is a Gated Linear Unit?

A Gated Linear Unit (GLU) is a neural network layer defined as the component-wise product of two linear transformations of the input, one of which is passed through a sigmoid.

So what we said above translates to the following equation.

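$$\text{GLU}(x) = \text{sigmoid}(xW + b) \otimes (xV + c)$$

Here x is the input (as a row vector), W and V are weight matrices, b and c are biases, and ⊗ is the element-wise product. GLUs have been found to be effective at capturing long-range dependencies in sequences while avoiding some of the vanishing gradient problems associated with other gating mechanisms, like those in LSTMs and GRUs.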
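To make the GLU formula concrete, here is a minimal pure-Python sketch of GLU(x) = sigmoid(xW + b) ⊗ (xV + c), treating x as a row vector as in the paper that introduced SwiGLU. The helper names (`affine`, `glu`) and the toy weights are hypothetical, chosen so that one output unit's gate is half open and the other's is almost fully open.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # W[i][j]: weight from input i to output j


def sigmoid(x: float) -> float:
    # Numerically stable logistic sigmoid.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def affine(x: Vector, W: Matrix, b: Vector) -> Vector:
    """Compute x W + b, with W stored as len(x) rows of len(b) columns."""
    return [sum(x_i * row[j] for x_i, row in zip(x, W)) + b_j
            for j, b_j in enumerate(b)]


def glu(x: Vector, W: Matrix, b: Vector, V: Matrix, c: Vector) -> Vector:
    """GLU(x) = sigmoid(xW + b) ⊗ (xV + c), where ⊗ is element-wise."""
    gate = [sigmoid(g) for g in affine(x, W, b)]  # each value in (0, 1)
    value = affine(x, V, c)                       # plain linear projection
    return [g * v for g, v in zip(gate, value)]


# Toy example: 2 inputs, 2 output units (arbitrary weights).
x = [1.0, -1.0]
W = [[0.0, 10.0], [0.0, 0.0]]  # xW = [0, 10]
b = [0.0, 0.0]
V = [[1.0, 2.0], [1.0, 0.0]]   # xV = [0, 2]
c = [3.0, 0.0]
print(glu(x, W, b, V, c))
```

The sigmoid branch acts as a soft gate in (0, 1) that scales each unit of the linear branch: here the first unit passes 0.5 × 3 = 1.5, and the second passes almost all of its value of 2.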

SwiGLU

Now that we know what Swish and GLU are, SwiGLU is simply a combination of the two: it is a GLU that uses Swish with β = 1 instead of sigmoid as its activation function, which gives the following formula:

$$\text{SwiGLU}(x) = \text{Swish}_1(xW + b) \otimes (xV + c)$$

Now let’s construct a Feed Forward Network (FFN) with the SwiGLU function. As in the paper, we omit the bias terms.

$$\text{FFN}_{\text{SwiGLU}}(x) = \left(\text{Swish}_1(xW) \otimes xV\right) W_2$$

How To Implement SwiGLU?

We can implement it in PyTorch as follows:

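```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SwiGLU(nn.Module):
    """SwiGLU feed-forward block: (SiLU(xW) * xV) W2, without biases.

    w1, w2 and w3 are nn.Linear modules; only their weights are used,
    so any bias they hold is ignored.
    """

    def __init__(self, w1: nn.Linear, w2: nn.Linear, w3: nn.Linear) -> None:
        super().__init__()
        self.w1 = w1  # gate projection: W = w1.weight.T
        self.w2 = w2  # value projection: V = w2.weight.T
        self.w3 = w3  # output projection: W2 = w3.weight.T

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x1 = F.linear(x, self.w1.weight)  # x W
        x2 = F.linear(x, self.w2.weight)  # x V
        hidden = F.silu(x1) * x2  # Swish_1(x W) ⊗ x V
        return F.linear(hidden, self.w3.weight)  # (...) W2
```

The SiLU function used here is the same as Swish with β = 1.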
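As a cross-check of the FFN formula that does not need PyTorch, here is a minimal pure-Python sketch of FFN_SwiGLU(x) = (Swish_1(xW) ⊗ xV) W2 without biases. The helper names (`silu`, `vecmat`, `ffn_swiglu`), the toy sizes and the weights are hypothetical, chosen only for illustration. Here W, V and W2 play the roles of `w1.weight.T`, `w2.weight.T` and `w3.weight.T` in the PyTorch class above, since `F.linear(x, weight)` computes `x @ weight.T`.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # stored as (input size) rows of (output size) columns


def silu(x: float) -> float:
    """Swish with beta = 1, i.e. x * sigmoid(x), computed stably."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    z = math.exp(x)
    return x * z / (1.0 + z)


def vecmat(x: Vector, W: Matrix) -> Vector:
    """Bias-free linear map x W, with W stored as len(x) rows."""
    return [sum(x_i * row[j] for x_i, row in zip(x, W)) for j in range(len(W[0]))]


def ffn_swiglu(x: Vector, W: Matrix, V: Matrix, W2: Matrix) -> Vector:
    """(SiLU(xW) ⊗ xV) W2: two parallel projections, a gate, then W2."""
    gate = [silu(h) for h in vecmat(x, W)]         # d_model -> d_ff, then SiLU
    value = vecmat(x, V)                           # d_model -> d_ff
    hidden = [g * v for g, v in zip(gate, value)]  # element-wise product
    return vecmat(hidden, W2)                      # d_ff -> d_model


# Toy sizes: d_model = 2, d_ff = 3 (arbitrary weights).
x = [1.0, 2.0]
W = [[1.0, 0.0, -1.0], [0.0, 1.0, 0.0]]     # (d_model, d_ff): xW = [1, 2, -1]
V = [[1.0, 0.5, 0.0], [1.0, 0.0, 1.0]]      # (d_model, d_ff): xV = [3, 0.5, 2]
W2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]   # (d_ff, d_model)
print(ffn_swiglu(x, W, V, W2))
```

In a real model, d_ff is typically larger than d_model, so W and V expand the input before W2 projects it back down to the model dimension.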

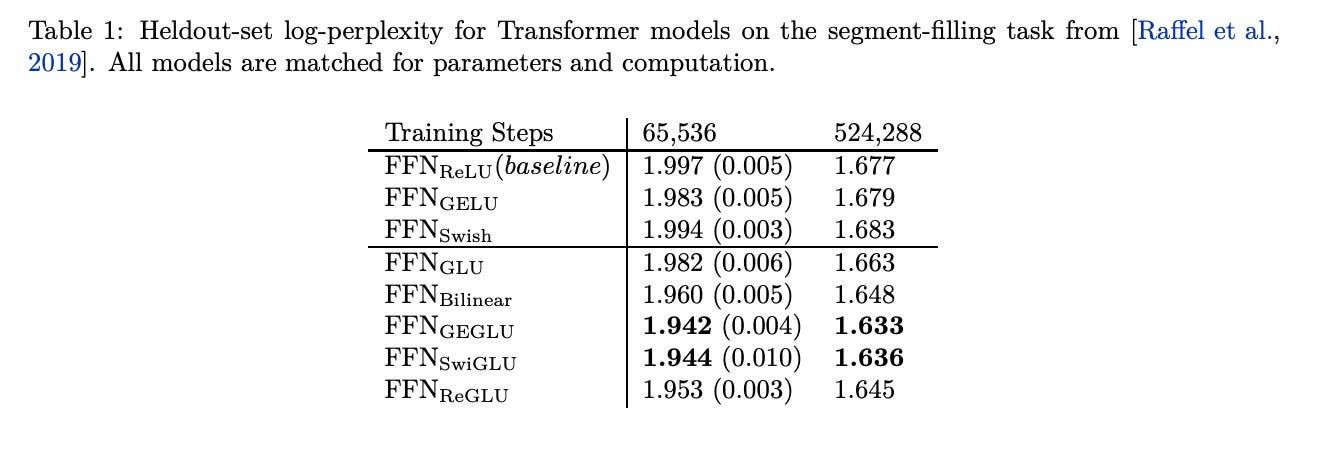

Looking at the results of a Transformer that uses SwiGLU compared to other GLU variants, we see that SwiGLU is among the best-performing variants during pretraining.

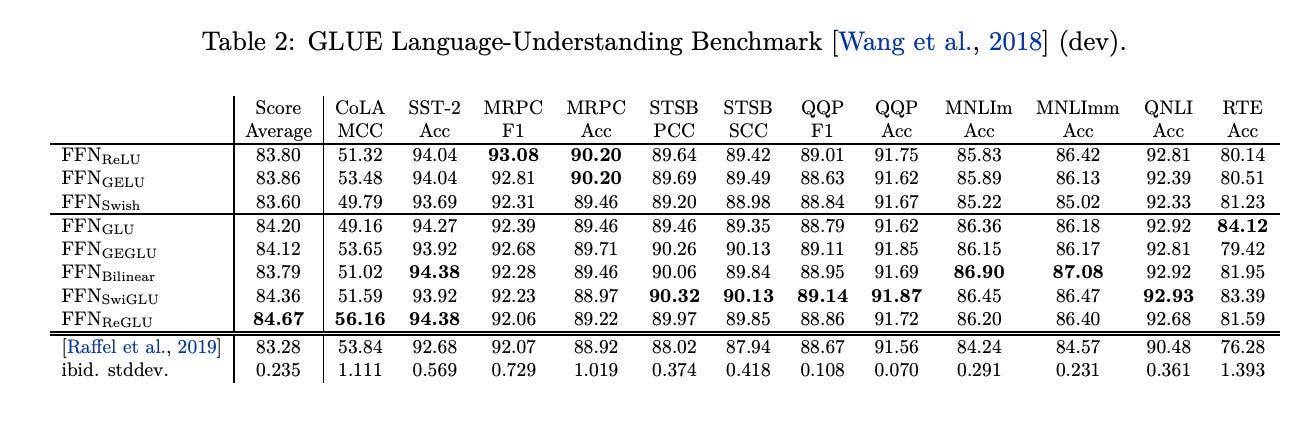

It also compares favorably on downstream tasks.

This helps explain why many recent LLMs, such as LLaMA, OLMo and PaLM, have adopted SwiGLU.

Why Does It Work?

"We offer no explanation as to why these architectures seem to work; we attribute their success, as all else, to divine benevolence."

I took that sentence directly from the research paper that introduced SwiGLU. It shows that there is still a lot to learn and figure out about deep learning and explainable AI.

At the moment, we cannot pinpoint exactly why SwiGLU performs better.

Clap, Follow and Comment if you like the article!

Stay in touch by connecting with me on LinkedIn (Aziz Belaweid) or GitHub.